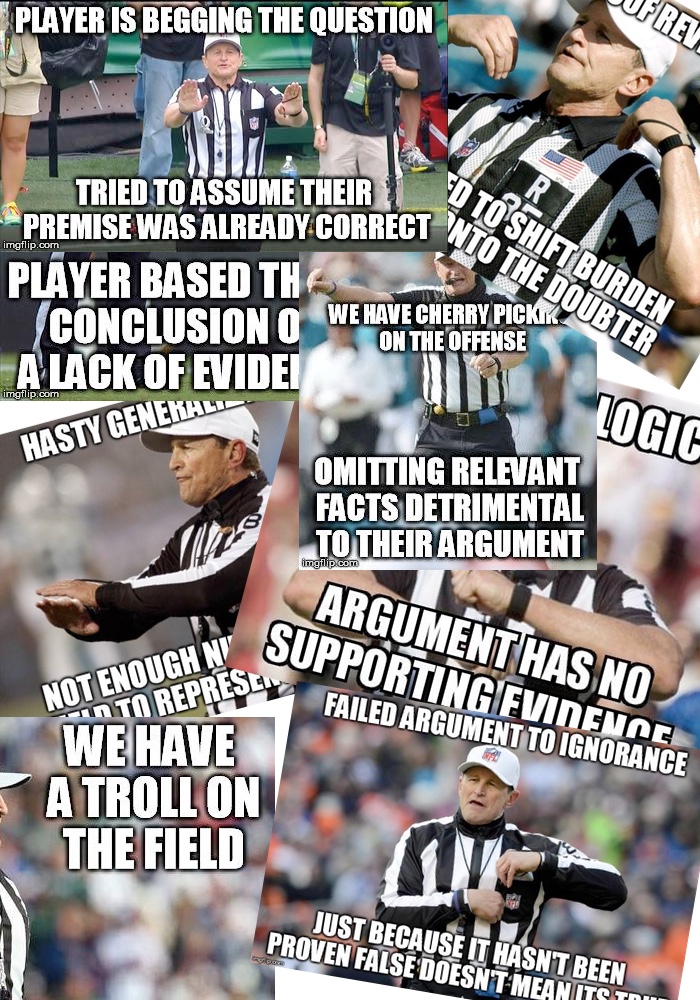

Ever since I first encountered the online world, this picture has described much of my life.

Rhetoric, both spoken and written, can have extremely dangerous consequences.

In online debates, the truth is often concealed beneath layers of fallacies and, at times, outright deception.

Advancements in modern artificial intelligence, however, could help steer online conversations in a more honest and productive direction.

Much of the tech that’s powering things like Siri and Google Translate could one day be used to keep track of who was making what arguments and automatically warn you of any logical fallacies. It could also prevent you from making them yourself.

Implementing such a technology would not be easy. It would run the risk of being too annoying, or, if its accuracy depended on some central authority, it could be used as a weapon to shape opinions and beliefs worldwide (the exact opposite of its intended purpose).

Still, the very possibility is intriguing and probably worth exploring. If nothing else, it could act as a sort of evolution of the traditional grammar and spell check technology that we’re already used to.

I’m pretty sure this risks Automation Bias. https://en.wikipedia.org/wiki/Automation_bias

I don’t think so. That would be like saying spell check will make us worse spellers. To have the computer constantly point out your spelling mistakes will make you a better speller when it’s off. At least that’s been my experience.

While I agree that such a “logic helper agent” might someday become reality, I fear that the potential for misuse and the risks outweigh the benefits. It might feed the same tendency we already see, where our brains delegate work to machines (how many telephone numbers do you still know by heart?). Though there is certainly intellectual work that can be replaced without much damage (like memorizing telephone numbers), outsourcing logical thinking touches the very core of our thinking.

I also think it would only escalate the arms race in confrontations. Though it would be positive to get rid of the most annoying and stupid levels of argument, it would not change the reasons why people try to gain power over other people (e.g. through manipulation).

Science, to a certain extent, works well with that scheme. Without a solid logical foundation, a scientist will not get far. But that is only a kind of technical layer. What are the reasons for choosing certain topics and not others? There are endless research topics, but people choose a selection that matches the forces that drive them.

What are the driving forces behind all that?

We are very used to excluding psychology because it is so blurry and easily gets messy. But I think diving into it would help us understand much better which forces drive people. If you manage to address those deeper-lying reasons, the superficial stuff in our daily business will be seen in another light.

And going even further, at the risk of stepping into even blurrier fields, let’s ask ourselves what drives us beyond our emotional and psychological needs.

The philosophical question of why we are motivated to do certain things and oppose others might throw some light on more existential layers.

I like this idea, and those referee cards; thanks for sharing — someone clearly spent a lot of time on that project! So here we are 7 years later and…

● we have conversational agents and more A.I. writing assistants than we can count

● the functionality that you describe could now be deployed as a browser extension for those who want to improve their critical thinking and debate skills

● Google engineer says an A.I. changed his mind about Asimov’s ‘third law of robotics’

https://mindmatters.ai/2019/09/the-three-laws-of-robotics-have-failed-the-robots/

While I don’t believe that LaMDA is sentient [because its opinions about a subject will change depending on the personality type it was instructed to model], the ability of an A.I. to provide nuanced insight regarding complex issues might have some practical applications in the construction of better laws & policies, and having an A.I. assistant which can critique your writing on demand could be productive if it helps the writer to see angles they might have missed.

Of course, one must have a sincere interest in truth AND benevolent motives to use this tool effectively, and in the course of debate you will still encounter people with selfish intentions, irrational fears, childhood programming, or emotional attachments to belief systems who cannot be swayed by any amount of new evidence or logic. And little can be done for religious fanatics who insist that two contradictory things are both true “because the holy book said so”. But I know many people who are more afraid of ADMITTING that they were wrong than they are of BEING wrong, and they will avoid debate altogether (or change the subject when caught in a contradiction). Some of those people might be more inclined to engage (or concede that they have lost a debate) if they are speaking privately with a machine that represents the sum of human knowledge, so A.I. affords more opportunities for people to think and change their minds. Considering how factionalized & dysfunctional our society (and government) is, the intellectual exercise seems beneficial so long as the A.I. remains politically neutral and entirely transparent… but that might require an independent, distributed computing platform where the users share CPU resources (because the “mainstream” tools are biased by the agendas of capital).